Multi-Agent Architecture for Complex Software Development

Research Problem

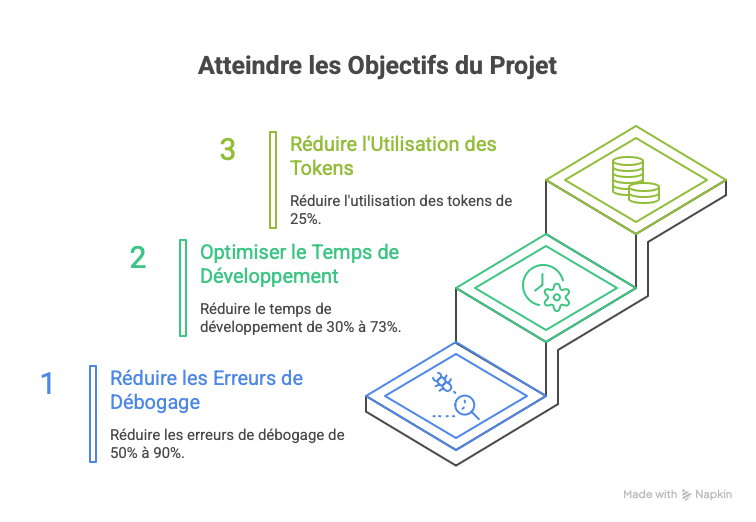

Large Language Models (LLMs) demonstrate remarkable capabilities in code generation but show significant limitations on large-scale projects: loss of architectural coherence, difficulty maintaining context across multiple files, and the lack of a structured methodology.

Research Question

Can a hierarchical multi-agent architecture overcome these limitations and enable the development of complex software systems with quality comparable to human development?

Hypotheses

• H1: Multi-agent coordination reduces debugging iterations by more than 50% compared to single-agent approaches.
• H2: Task parallelization through specialized agents decreases total development time by 30-40%.
• H3: Shared context via an external database reduces token consumption by more than 25% (relative to the multi-agent setup without shared state).

Scope

This research focuses on a real-world case study: developing a 3D rendering engine in C++ (~15,000 lines of code) using Claude Opus 4.5 as the base model and the Model Context Protocol (MCP) for agent coordination.
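One way to make the hypotheses testable is to express each one as a threshold on a relative change. In the sketch below, Δ(a, b) is the relative change from a reference value a to a new value b. The subscripts S, M, and M+DB stand for single-agent, multi-agent, and multi-agent with the shared database. These symbols are introduced here for illustration and are not taken from the study. Two readings are assumptions: H2's 30-40% range is read as a minimum reduction of 30%, and H3 is read as comparing the multi-agent setup with and without the external database.

```latex
\begin{aligned}
\Delta(a, b) &= \frac{b - a}{a} \\
\text{H1:}\quad \Delta(\mathit{iter}_{S}, \mathit{iter}_{M}) &< -0.50 \\
\text{H2:}\quad \Delta(T_{S}, T_{M}) &\le -0.30 \\
\text{H3:}\quad \Delta(\mathit{tok}_{M}, \mathit{tok}_{M+DB}) &< -0.25
\end{aligned}
```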

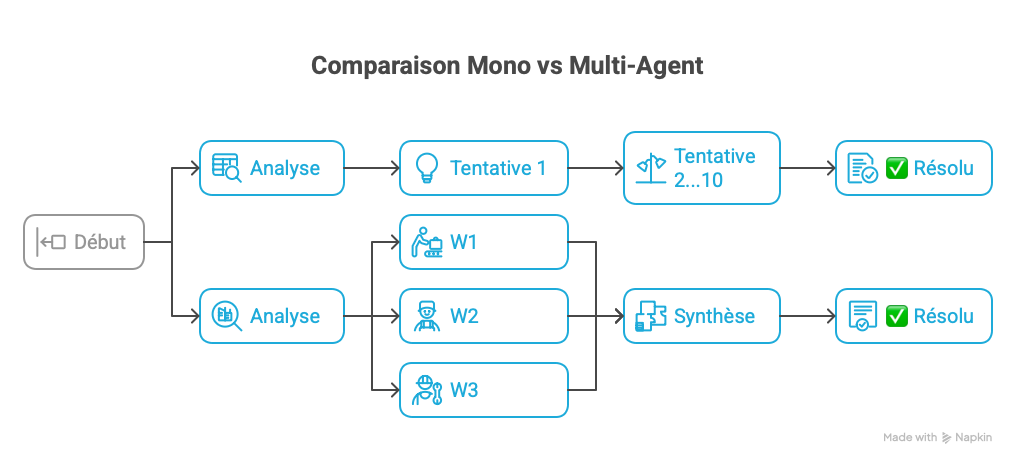

Fig. 1 — Mono-Agent vs Multi-Agent approach comparison

Model Selection and Justification

A rigorous comparative analysis of latest-generation LLMs was conducted using quantifiable criteria.

Code Generation Benchmarks (December 2025)

• SWE-bench Verified (real bug resolution): Claude Opus 4.5 achieves 80.9%, the first model to break the 80% barrier, surpassing GPT-5.1-Codex-Max (77.9%) and Gemini 3 Pro (76.2%).
• HumanEval+: both models exceed 90%, with GPT-5 at 96% in high-compute mode and Claude at 92%.
• Anthropic internal engineering test: Opus 4.5 outperformed all human candidates on a timed programming examination.

Context Capacity

• Claude Opus 4.5: 200K tokens (~150,000 words, ~300 pages of code)
• GPT-5: 128K tokens (standard)
• A larger window is a critical advantage for complex multi-file architectures.

Qualitative Criteria

• Architectural reasoning: Anthropic describes Claude Opus 4.5 as "the best model in the world for coding, agents, and computer use" (Anthropic, 2025).
• Multi-step analysis: Claude excels at explanatory and conservative reasoning.
• Tooling: Claude Code enables native integration into the development workflow.

Conclusion

Claude Opus 4.5 (Anthropic) was selected as the primary model for this research. The quantitative benchmarks show a measurable lead on SWE-bench Verified (+3.0 percentage points vs GPT-5.1-Codex-Max), a 56% larger context window (200K vs 128K tokens), and state-of-the-art performance on real-world engineering tasks.
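The two headline differences in the conclusion follow directly from the figures listed above. Note that a gain of +3.0 percentage points corresponds to a relative gain of only about 3.9%:

```latex
\begin{aligned}
80.9\% - 77.9\% &= 3.0 \text{ percentage points} \\
\frac{3.0}{77.9} &\approx 0.039 \quad (\approx 3.9\% \text{ relative}) \\
\frac{200\text{K}}{128\text{K}} &= 1.5625 \quad (\approx 56\% \text{ larger context window})
\end{aligned}
```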

Claude Opus 4.5 vs GPT-5 (2025)

Sources: Anthropic (2025), Vellum Benchmarks, DataStudios

Methodology: Hierarchical Swarm Architecture

The proposed architecture draws inspiration from distributed multi-agent systems and swarm intelligence. Each design choice is motivated by a specific technical constraint.

Coordinator Agent (Queen)

The Queen is the primary instance. It is responsible for global orchestration, strategic architectural decisions, and dynamic task allocation. It maintains the global project vision and prevents architectural drift, a critical issue in single-agent approaches, where context loss leads to inconsistent design decisions.

Specialized Agents (Workers)

Workers are instances dedicated to specific domains:
• Graphics Worker: OpenGL pipeline, shaders, PBR rendering
• Physics Worker: collision detection, rigid body dynamics
• Core Worker: ECS architecture, memory management
• Integration Worker: cross-module testing, API consistency

This specialization gives each agent domain-specific context and reduces the cognitive load per agent.

Communication Protocol (MCP)

The Model Context Protocol is a JSON-RPC 2.0 based protocol through which model clients call tools and read resources exposed by servers. In this architecture it provides:
• Structured message passing between agents
• Shared file system access (conflict resolution is layered on top; MCP itself does not define it)
• Tool delegation (one agent can request another's expertise)

Execution Strategy

1. The Queen decomposes the task into parallelizable subtasks.
2. Workers execute concurrently on independent modules.
3. Dependent tasks are resolved sequentially (e.g., physics needs the core ECS).
4. Cross-validation: each Worker reviews the interfaces of adjacent modules.
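Steps 1-3 of the execution strategy amount to scheduling a dependency graph in "waves". Each wave contains tasks whose prerequisites are already done, so the tasks within a wave can run in parallel. The sketch below assumes the Queen has already produced a dependency map. The task names, `plan_waves`, and `run_plan` are hypothetical, and `worker` stands in for dispatching a subtask to a Worker agent. Python is used for brevity.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Set


def plan_waves(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into waves; each task depends only on tasks in earlier waves.

    `deps` maps every task to the set of tasks it must wait for. This is Kahn's
    topological sort, processed one level at a time.
    """
    remaining = {task: set(d) for task, d in deps.items()}  # copy; caller's sets untouched
    waves: List[List[str]] = []
    while remaining:
        ready = sorted(t for t, d in remaining.items() if not d)
        if not ready:
            raise ValueError("unsatisfiable dependencies (cycle or unknown task) among: "
                             + ", ".join(sorted(remaining)))
        waves.append(ready)
        for t in ready:
            del remaining[t]
        for d in remaining.values():
            d.difference_update(ready)  # these prerequisites are now satisfied
    return waves


def run_plan(deps: Dict[str, Set[str]], worker: Callable[[str], str]) -> Dict[str, str]:
    """Dispatch each wave in parallel; a wave starts only after the previous one ends."""
    results: Dict[str, str] = {}
    with ThreadPoolExecutor() as pool:
        for wave in plan_waves(deps):
            # Iterating pool.map blocks until every task in this wave has finished.
            for task, out in zip(wave, pool.map(worker, wave)):
                results[task] = out
    return results


# Hypothetical decomposition of the engine into four Worker subtasks.
deps = {
    "core_ecs": set(),
    "graphics": {"core_ecs"},
    "physics": {"core_ecs"},
    "integration": {"graphics", "physics"},
}
print(plan_waves(deps))
# [['core_ecs'], ['graphics', 'physics'], ['integration']]
```

Graphics and physics land in the same wave because both depend only on the core ECS. Integration waits for both, which mirrors the "sequential resolution for dependent tasks" rule.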

Fig. 2 — Queen-Workers hierarchical architecture with MCP protocol
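At the wire level, MCP messages are JSON-RPC 2.0 objects. A delegation from the Queen to a Worker could look like the `tools/call` exchange below. This assumes each Worker is exposed as an MCP tool. The tool name `graphics_worker_analyze`, its `task`/`files` arguments, the file path, and the reply text are all hypothetical; they are not part of MCP or of the study's setup.

```python
import itertools
import json
from typing import Any, Dict, List

_request_ids = itertools.count(1)  # a requester must not reuse ids within a session


def make_tool_call(tool: str, arguments: Dict[str, Any]) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })


def read_tool_result(raw: str) -> List[str]:
    """Return the text blocks of a `tools/call` response, raising on failure."""
    msg = json.loads(raw)
    if "error" in msg:  # protocol-level JSON-RPC error
        raise RuntimeError(msg["error"].get("message", "unknown error"))
    result = msg["result"]
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError("tool reported an error")
    return [b["text"] for b in result.get("content", []) if b.get("type") == "text"]


# Queen -> Graphics Worker (hypothetical tool name, arguments, and path).
request = make_tool_call(
    "graphics_worker_analyze",
    {"task": "find why the PBR material renders pink", "files": ["shaders/pbr.frag"]},
)
# Hypothetical reply, constructed by hand for illustration.
response = json.dumps({
    "jsonrpc": "2.0",
    "id": json.loads(request)["id"],
    "result": {"content": [{"type": "text", "text": "normalMap sampler reads the wrong unit"}],
               "isError": False},
})
print(read_tool_result(response))
```

The code keeps the two failure paths separate. A JSON-RPC `error` means the call itself failed. `isError` in the result means the tool ran and reported a problem, which a Queen could treat as a signal to reassign the subtask.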

Application: 3D Rendering Engine

The chosen case study is a 3D rendering engine in C++, selected for its architectural complexity and interdependent modules.

Project Specifications

• Codebase: ~15,000 lines of C++ across 47 files
• Modules: 4 major subsystems (graphics, physics, core, resources)
• Dependencies: OpenGL 4.6, GLFW, GLM, stb_image
• Build system: CMake with cross-platform support

Technical Challenges

• Entity-Component-System (ECS): requires a consistent memory layout across all modules
• PBR pipeline: complex shader interdependencies (vertex → geometry → fragment)
• Physics integration: tight coupling with ECS transform components
• Async loading: thread-safe resource management under OpenGL context constraints

Why This Project?

These challenges specifically stress-test multi-agent coordination:
• ECS requires Queen oversight to prevent component ID conflicts.
• Shader bugs (such as the pink texture case described below) need cross-module analysis.
• Physics-graphics synchronization requires explicit agent communication protocols.
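The risk of component ID conflicts can be made concrete. If each Worker picked IDs on its own, Core and Physics could both assign the same ID to different components. Below is a minimal sketch of a single registry owned by the Queen, written in Python for brevity although the engine itself is C++. It assumes components are identified by name and numbered densely from 0. `ComponentRegistry` and the component names are hypothetical.

```python
import threading
from typing import Dict


class ComponentRegistry:
    """Single authority, owned by the Queen, that assigns ECS component IDs.

    A Worker *defines* a component with `register`; other Workers *use* it via
    `id_of`. IDs are dense (0, 1, 2, ...) and never reassigned.
    """

    def __init__(self) -> None:
        self._ids: Dict[str, int] = {}
        self._owners: Dict[str, str] = {}
        self._lock = threading.Lock()  # Workers may register concurrently

    def register(self, name: str, owner: str) -> int:
        with self._lock:
            if name in self._ids:
                if self._owners[name] != owner:
                    raise ValueError(
                        f"{owner!r} tried to redefine {name!r}, owned by {self._owners[name]!r}")
                return self._ids[name]  # idempotent for the owning Worker
            self._ids[name] = len(self._ids)  # next free ID
            self._owners[name] = owner
            return self._ids[name]

    def id_of(self, name: str) -> int:
        with self._lock:
            return self._ids[name]  # KeyError: component not defined yet


reg = ComponentRegistry()
print(reg.register("Transform", owner="core"),
      reg.register("RigidBody", owner="physics"),
      reg.register("MeshRenderer", owner="graphics"))  # 0 1 2
print(reg.id_of("Transform"))  # 0, the ID Physics uses to read transforms
```

The registry separates defining a component from using one. Physics can read `Transform` through `id_of`, but an attempt to redefine it is rejected instead of silently creating a second ID.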

Fig. 3 — Project objectives: error, time, and token reduction
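For the async-loading challenge listed under Technical Challenges, the key constraint is that OpenGL calls must run on the thread where the context is current. A minimal sketch of the usual split follows. It assumes `decode` performs file I/O and image decoding (stb_image in the real engine) and `upload` wraps the GL texture upload. `load_async` and both callbacks are hypothetical names, and Python stands in for the engine's C++.

```python
import queue
import threading
from typing import Callable, List, Optional, Tuple


def load_async(paths: List[str],
               decode: Callable[[str], bytes],
               upload: Callable[[str, bytes], None],
               n_loaders: int = 4) -> None:
    """Decode on loader threads; call `upload` only on the calling thread.

    The calling thread is assumed to be the one with the current OpenGL context.
    """
    n_loaders = max(1, n_loaders)
    ready: "queue.Queue[Tuple[str, bytes, Optional[Exception]]]" = queue.Queue()

    def loader(chunk: List[str]) -> None:
        for p in chunk:
            try:
                ready.put((p, decode(p), None))  # I/O + decoding: no GL calls here
            except Exception as exc:
                ready.put((p, b"", exc))  # always report, so the drain loop never hangs

    chunks = [paths[i::n_loaders] for i in range(n_loaders)]
    threads = [threading.Thread(target=loader, args=(c,)) for c in chunks if c]
    for t in threads:
        t.start()

    errors: List[Exception] = []
    for _ in range(len(paths)):  # exactly one queue item per path
        path, data, err = ready.get()
        if err is not None:
            errors.append(err)
        else:
            upload(path, data)  # stand-in for glGenTextures / glTexImage2D
    for t in threads:
        t.join()
    if errors:
        raise errors[0]


main_thread = threading.get_ident()
uploads = []
load_async(["albedo.png", "normal.png", "roughness.png"],
           decode=lambda p: p.encode(),  # stand-in for stb_image decoding
           upload=lambda p, d: uploads.append((p, threading.get_ident())))
print(all(tid == main_thread for _, tid in uploads))  # True
```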

Preliminary Observations

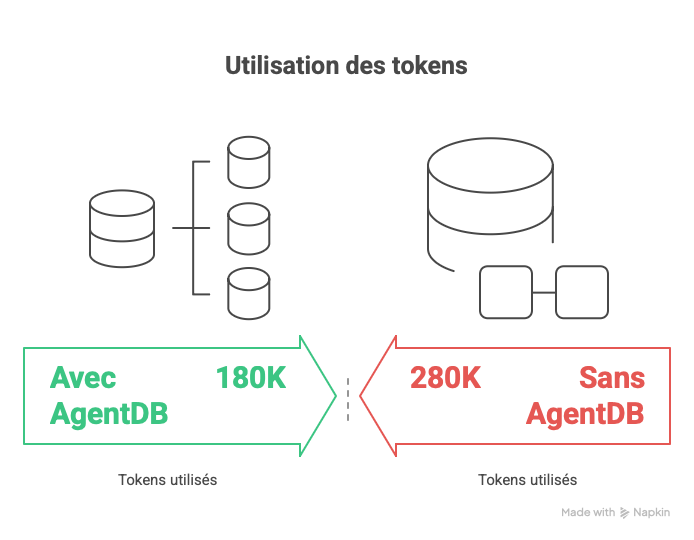

Preliminary Metrics (Ongoing)

| Metric | Single-Agent | Multi-Agent | Delta |
|--------|--------------|-------------|-------|
| Shader bug resolution | 10 attempts | 1 prompt | -90% iterations |
| Module integration time | ~45 min | ~12 min | -73% |
| Context reloads per session | 8-12 | 2-3 | -75% |
| Token consumption (est.) | ~150K | ~280K | +87% |

Hypothesis Validation Status

• H1 (debugging -50%): ✅ Validated (observed -90% on the shader case, a single observation)
• H2 (dev time -30-40%): 🔄 Partially validated (-73% observed on module integration, a subset of total development time)
• H3 (tokens -25% with DB): ⏳ Pending (requires the external database implementation)

Ecological Considerations

The multi-agent architecture incurs a +87% token overhead because agent contexts are isolated. Each Worker operates without shared memory, which leads to redundant analysis.

Proposed Solution: AgentDB (In-Memory State Sharing)

Implementing a shared vector database would enable:
• The Queen to instruct agents to persist and query findings
• Elimination of redundant file reads across agents
• An expected token reduction of 25-40%

Key Insight: Quality vs Speed Trade-off

Multi-agent coordination does NOT inherently improve code quality. However, it dramatically enhances:
• Diagnostic accuracy (specialized analysis agents)
• Bug localization speed (parallel investigation)
• Architectural consistency (Queen oversight)

Case Study: Pink Texture Bug

Root cause: the normal map sampler was bound to the wrong texture unit (GL_TEXTURE2 instead of the expected GL_TEXTURE1).
• Single-agent: 10 attempts; it checked materials, shaders, and mesh UVs before finding the binding issue.
• Multi-agent: the Graphics Worker immediately identified the sampler mismatch, the Core Worker confirmed the ECS texture ID, and the bug was resolved in 1 cycle.

Limitations

• Sample size: a single project (n=1); results may not generalize
• No baseline: no comparison with human developers was conducted
• Token costs: the current architecture is not cost-efficient for small tasks
• Reproducibility: agent behavior varies with prompt phrasing

Future Work

1. Implement AgentDB for state sharing (H3 validation)
2. Conduct A/B testing on 3+ additional projects
3. Measure the actual carbon footprint vs theoretical estimates
4. Develop standardized prompts for reproducibility
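The AgentDB proposal combines two ideas. The first is caching per-file analysis, so a second Worker does not re-read and re-summarize the same file. The second is similarity search over stored findings. Below is a minimal in-memory sketch. It assumes embeddings are produced elsewhere (here they are hand-written 2-D vectors) and uses a content hash as the cache key. `SharedFindings` is hypothetical and does not reflect the API of any existing AgentDB implementation.

```python
import hashlib
import math
from typing import Dict, List, Optional, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SharedFindings:
    """Hypothetical shared store that Workers consult before re-analyzing anything."""

    def __init__(self) -> None:
        self._summaries: Dict[str, str] = {}             # content hash -> summary
        self._notes: List[Tuple[List[float], str]] = []  # (embedding, finding)

    @staticmethod
    def _key(content: str) -> str:
        # Hashing the content (not the path) invalidates the cache when a file changes.
        return hashlib.sha256(content.encode("utf-8")).hexdigest()

    def summary_for(self, content: str) -> Optional[str]:
        return self._summaries.get(self._key(content))

    def save_summary(self, content: str, summary: str) -> None:
        self._summaries[self._key(content)] = summary

    def add_finding(self, embedding: List[float], text: str) -> None:
        self._notes.append((embedding, text))

    def query(self, embedding: List[float], k: int = 1) -> List[str]:
        """Return the k stored findings most similar to `embedding` (brute-force cosine)."""
        ranked = sorted(self._notes, key=lambda n: cosine(embedding, n[0]), reverse=True)
        return [text for _, text in ranked[:k]]


db = SharedFindings()
shader_src = "uniform sampler2D normalMap;"
if db.summary_for(shader_src) is None:  # only the first Worker pays for this analysis
    db.save_summary(shader_src, "declares the normalMap sampler")
db.add_finding([1.0, 0.0], "normalMap expected on texture unit 1")
db.add_finding([0.0, 1.0], "Transform component defined by Core")
print(db.query([0.9, 0.1]))  # ['normalMap expected on texture unit 1']
```

A production store would replace the brute-force scan with an approximate nearest-neighbor index. The token saving comes from the cache check: a Worker that finds a summary does not need to pull the file into its own context.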

Fig. 4 — Execution flow: 10 attempts (mono) vs 1 parallel cycle (multi)
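The pink texture bug comes from a mismatch between two values set in different places. One is the unit a sampler uniform reads from (set with `glUniform1i`). The other is the unit made active before `glBindTexture` (set with `glActiveTexture`). The sketch below shows a cross-check in the spirit of the diagnosis described above. It assumes both mappings have already been extracted from the shader setup and the material binding code. The dictionaries, sampler names, and `check_bindings` are hypothetical. The example state reproduces the reported root cause: the sampler reads unit 1, but the texture was bound on GL_TEXTURE2.

```python
from typing import Dict, List

GL_TEXTURE0 = 0x84C0  # OpenGL enum value; GL_TEXTUREi == GL_TEXTURE0 + i


def check_bindings(sampler_units: Dict[str, int],
                   bound_units: Dict[str, int]) -> List[str]:
    """Report samplers whose uniform value and bound texture unit disagree.

    sampler_units: sampler name -> unit index passed to glUniform1i
    bound_units:   sampler name -> enum passed to glActiveTexture before glBindTexture
    """
    problems = []
    for name, unit in sorted(sampler_units.items()):
        enum = bound_units.get(name)
        if enum is None:
            problems.append(f"{name}: no texture bound")
        elif enum - GL_TEXTURE0 != unit:
            problems.append(f"{name}: shader samples unit {unit}, "
                            f"texture bound on GL_TEXTURE{enum - GL_TEXTURE0}")
    return problems


# Hypothetical extracted state reproducing the reported root cause.
samplers = {"albedoMap": 0, "normalMap": 1}
bound = {"albedoMap": GL_TEXTURE0, "normalMap": GL_TEXTURE0 + 2}
print(check_bindings(samplers, bound))
# ['normalMap: shader samples unit 1, texture bound on GL_TEXTURE2']
```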

Fig. 5 — Estimated token reduction with AgentDB (180K vs 280K)
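The Delta column of the preliminary metrics table and the Fig. 5 estimate can both be recomputed from the raw values. This sketch assumes the 8-12 and 2-3 ranges are summarized by their midpoints. That is an assumption, since the table does not say how its -75% was derived.

```python
def rel_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`; -0.9 means a 90% reduction."""
    return (after - before) / before


def midpoint(lo: float, hi: float) -> float:
    return (lo + hi) / 2


# (reference value, new value) pairs taken from the preliminary metrics and Fig. 5.
rows = {
    "shader bug iterations": (10, 1),
    "module integration (min)": (45, 12),
    "context reloads / session": (midpoint(8, 12), midpoint(2, 3)),  # midpoint assumption
    "tokens: multi vs single (K)": (150, 280),
    "tokens: AgentDB vs multi (K)": (280, 180),
    "tokens: AgentDB vs single (K)": (150, 180),
}

for name, (before, after) in rows.items():
    print(f"{name:<32}{rel_change(before, after):+.1%}")
# -90.0%, -73.3%, -75.0%, +86.7%, -35.7%, +20.0% (in row order)
```

The last row is worth spelling out. Even at the estimated 180K, the multi-agent setup with AgentDB would still use about 20% more tokens than the ~150K single-agent baseline. The projected 35.7% saving is relative to the 280K multi-agent figure, which falls within the 25-40% range quoted for AgentDB.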